"Now this is what good title writing must mean," I told myself. The decision to click took me a whole split second: I just had to read it... After all: why exactly should self-driving cars kill? I know why taxi drivers sometimes want to flatten me when I ride my bicycle, but the motivation of self-driving cars is necessarily opaque.

Of course, if one stops to read the phrase underneath (which I didn't), things start to get more mundane, but scary nonetheless: what should a smart car decide when faced with an ethical dilemma? Suppose the car has no brakes, there is a group of people in front of it, a wall on the left and a single person on the right. Which way should it swerve?

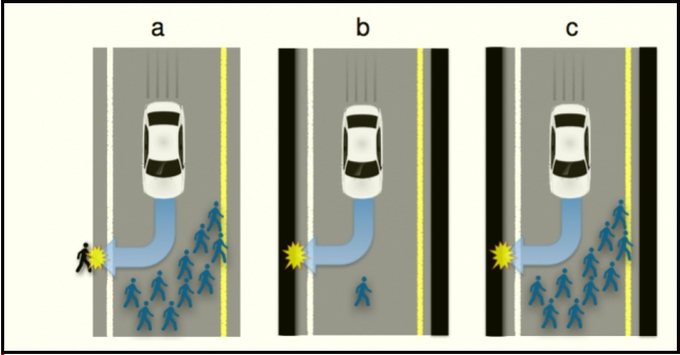

The image above is a version of a well-known (and much discussed) ethical dilemma: the trolley problem. A host of problems and implications stem from the simple acknowledgement that there are cases where there simply isn't a win-win solution.

To fully appreciate the complexity of the problem, though, I recommend going to the original arXiv paper that launched all the hoopla. It goes without saying that this is a terrific example of how a genuinely interesting scientific paper gets morphed into an eye-catching headline, while important elements of the discussion are left out.

Let me cite some of the most important conclusions presented by the authors, just to whet your appetite:

- Manufacturers and regulators will need psychologists to apply the methods of experimental ethics to situations involving autonomous vehicles (AVs) and unavoidable harm.

- Surveys show that people are relatively comfortable with utilitarian AVs, programmed to minimize the death toll in case of unavoidable harm.

- Some of the key aspects future AI should address are 1) the perceived morality of sacrificing the owner/passenger, 2) the public's willingness to see this self-sacrifice legally enforced, 3) the expectation that AIs will be programmed to self-sacrifice, and 4) the willingness to buy self-sacrificing AVs.

At ke Solutions we don't program self-driving cars (yet! ;-)), but we have noticed that clients easily overestimate the ability of technology and programming to solve every problem. We see many, many cases where customers simply do not want to accept that software comes down to trade-offs and to a really good understanding of the problems you want to solve with it: security versus accessibility, amount of content versus navigability, and so on. Enough said.

I highly recommend the paper; it is eye-opening on several levels. While you're at it, see this 2008 post that in a way anticipates this discussion: "My Mother the Car"!

P.S. Safe driving!
