Less than a year ago, Geoffrey Hinton was asked in a Reddit Q&A session: "What frontiers and challenges do you think are the most exciting for researchers in the field of neural networks in the next ten years?" His answer surprised me with how practical it was: "I will be disappointed if in five years' time we do not have something that can watch a YouTube video and tell a story about what happened".

Who is Geoffrey Hinton and why is he worth listening to?

Briefly, Hinton is one of the pioneers of AI and neural networks, one of those giants a future Newton will thank for letting him or her climb onto their shoulders (hopefully without the biting sarcasm and downright nastiness of the original witticism). Closer to the point I am trying to make, he is The Man Google Hired to Make AI a Reality - a line I took from a fantastic post by Daniela Hernandez about Hinton and other top researchers who have been recruited by IT giants over the last couple of years to crack tough problems.

For a busy and very prominent researcher to agree to work for Google - even part time - is a very big deal. Hinton alluded to some of his reasons for accepting when he answered a question about the importance of industrial labs versus university labs.

But what’s in it for Google? What objective are they so intent on achieving?

Automatic generation of text descriptions for images and videos

Look at the image below - what do you see? Even a child can describe images such as this one with ease. For a computer, it is hellishly difficult.

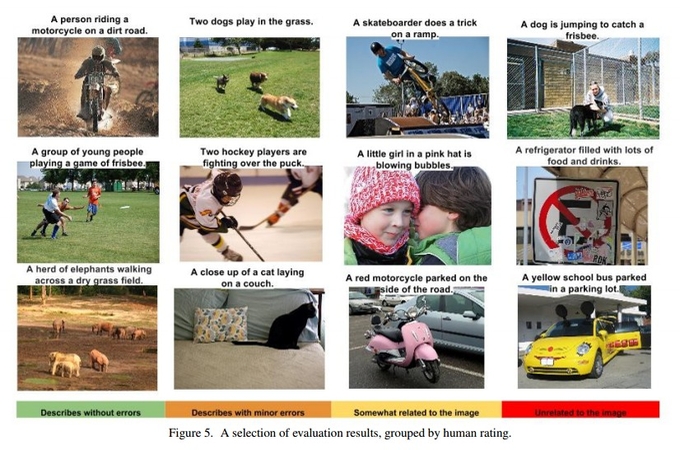

The next image that you see below comes from research that came out of Google's labs late last year: the Google Research blog had a post about it, and last month the paper appeared on arXiv. It shows the output of a neural-network-based AI that, after a period of training, attempts to automatically generate natural-language descriptions of images. The leftmost column contains images that posed no problems for the AI. Interestingly, as the authors remark in their arXiv paper, the system correctly identifies the frisbee and infers the play context even though the frisbee in the image is seen at a very acute angle and is therefore barely visible.

However, the further you move towards the rightmost columns, the more flabbergasted you will be at how the AI arrived at its descriptions: I can make a reasonable guess about how it got to the "yellow school bus", but I am at a loss about the "girl blowing bubbles". Difficult as this task is, the paper reports what seems to be a significant breakthrough - the neural network approach taken by this team produces good, albeit not perfect, results.

Potential implications coming to mind

A lot of technobabble, you might say. Good for them, good for Google, my respects to this scientist dude, but why should I care?

For users

Image and video search could suddenly become easy. The way we search today is not very efficient. Search engines mostly rely on what humans put in the ALT text of images, or in the keywords, hashtags and descriptions attached to videos; essentially, when you search for images you get results based not on the images themselves but on the text associated with them. I am intentionally simplifying the picture, but the gist of it is undeniable. We all get a bit annoyed when we search for a specific image and have to sift through garbage. Facebook, Google, Microsoft and others all want to crack this problem and be able to understand, categorize and search images and videos.

For owners of websites, SEO specialists, marketers

Even now, a lot of websites have poor image or video descriptions that hurt their SEO scores. If Hinton is right, we need to take another careful look at how we handle image descriptions. Search engines and social media platforms may soon be able to do their own labelling, and I suspect a lot of webpages will find themselves penalized for discrepancies between their human-written descriptions and the machine-generated ones. Think also about the oft-repeated motto "content is king": so far, by content we have meant text, but it needn’t be. If machines can understand images, would you care to bet on which type of content will be king? Images and video may well require a specialized SEO approach.

At ke Solutions we constantly think ahead and take steps to anticipate the near future. That is why our clients do not have to scramble to catch up. Do you need your images or videos appraised for their SEO value? We can tell you. Do you want your images and videos to work as efficiently as possible? We can do it.
